Summary

This piece describes the steps I took to back up the contents of my WordPress sites on paper. With appropriate modifications, Method 1 below would apply to pretty much any website (whether built with WordPress or not), except for AJAX-heavy websites, where scraping the HTML content requires some extra effort.

Motivation

Storing data digitally has many benefits over storing data on paper. However, digital data is also more brittle in several ways. Digital files may be vulnerable to format rot over time, and the hardware that stores digital files could break or fail to be supported in the future. In addition, digital files require a computer and electricity in order to be read, while plain text on paper does not. So information stored on paper seems particularly resilient against civilizational-collapse scenarios.

Of course, paper backups are more of a pain to use for recovery than are backups already in electronic format, but presumably paper backups could be scanned and read back into electronic text using OCR.

In my opinion, if you back up a website on paper, you should print out the raw HTML of each page rather than copying and pasting text directly from the web browser, because copying and pasting text from a browser (in addition to not being scalable) loses hyperlinks, as well as HTML comments, custom JavaScript, and other information that you can only see in a page's markup.

Gathering the website's text

Method 1: Download website and extract relevant HTML

My preferred way to back up a website is as follows. First, download the website using a program like HTTrack or SiteSucker. Second, run extract_valuable_HTML_from_WordPress_site_download.py (or a similar program of your own) on the website-download folder. For example, if the downloaded website is in a folder mywebsite/, then put the Python program in the parent folder of mywebsite/ and run

python extract_valuable_HTML_from_WordPress_site_download.py mywebsite

This will create a single output file mywebsite_extracted.html containing all the interesting HTML contents from your website without most of the extra boilerplate stuff that WordPress adds to each HTML file. You can open this .html file in a browser to examine the contents.

extract_valuable_HTML_from_WordPress_site_download.py works for the websites that I care about, but its simple heuristics for identifying the start and end of the "interesting" HTML contents may not work automatically in your case. If so, play around with the program for your situation. In the worst case, you could just collect all the HTML text, including boilerplate, into a file for printing, though this will increase the number of pages you have to print out.
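
To make the idea of "simple heuristics" concrete, here is a minimal sketch of how such an extraction could work. It is not the script named above. The two marker strings are assumptions about what a theme might emit around the main content, and the function names are invented for illustration. You would need to look at your own pages' markup and adjust the markers.

```python
import os

# Hypothetical markers: replace these with whatever your theme emits
# immediately before and after the main content of each page.
START_MARKER = '<div class="entry-content">'
END_MARKER = '<!-- .entry-content -->'


def extract_interesting_html(page_html, start=START_MARKER, end=END_MARKER):
    """Return the text from the first start marker up to the next end marker.

    Returns None when either marker is missing, so the caller can decide
    whether to skip the page or fall back to the full HTML.
    """
    start_index = page_html.find(start)
    if start_index == -1:
        return None
    end_index = page_html.find(end, start_index + len(start))
    if end_index == -1:
        return None
    return page_html[start_index:end_index]


def extract_site(download_dir, output_path):
    """Concatenate the extracted part of every .html file under download_dir.

    Returns the number of pages written. Keep output_path outside
    download_dir so the output file is not itself picked up by the walk.
    """
    pages_written = 0
    with open(output_path, "w", encoding="utf-8") as out:
        for folder, subfolders, filenames in os.walk(download_dir):
            subfolders.sort()  # visit folders in a stable order
            for filename in sorted(filenames):
                if not filename.lower().endswith((".html", ".htm")):
                    continue
                path = os.path.join(folder, filename)
                with open(path, encoding="utf-8", errors="replace") as f:
                    extracted = extract_interesting_html(f.read())
                if extracted is None:
                    continue  # markers not found; page is skipped
                out.write("<!-- source: %s -->\n" % path)
                out.write(extracted + "\n<hr>\n")
                pages_written += 1
    return pages_written
```

Calling `extract_site("mywebsite", "mywebsite_extracted.html")` from the parent folder would mirror the command shown earlier. Pages where the markers are not found are silently skipped in this sketch, so comparing the returned count against the number of pages you expect is a cheap recall check.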

Once you're satisfied with the precision and recall of this extraction process, copy and paste the output .html file's contents into a word processor and convert to PDF. This PDF will be what you print out. You might make the text size relatively small to save paper, as long as this doesn't make the printed text more vulnerable to degradation. (For example, if the text size is too small, then ink fading or mold seem more likely to render the text unreadable.)

Method 1 is nice for two reasons:

- It works on almost any website, not just one you own.

- All the information extracted is from the public web, so you don't have to worry about accidentally printing out sensitive information.

Method 2: Download your WordPress database

If you have direct access to your site's WordPress database, you can get the relevant text for printing out from there. I'll describe the steps for doing this below, but first a warning: according to some answers on Quora, as well as a friend of mine, a complete WordPress database (a big .sql file dump) contains some potentially sensitive information. Therefore, I assume that you should extract from the .sql file only the text of your pages/posts and not the other database tables. I don't know enough about WordPress to say whether this is sufficient to remove all sensitive information, so use this approach at your own risk.

Following are the steps you can use to extract the published versions of the pages on your websites for printing.

First, go to phpMyAdmin. For me, that's accessible through HostGator's Control Panel page:

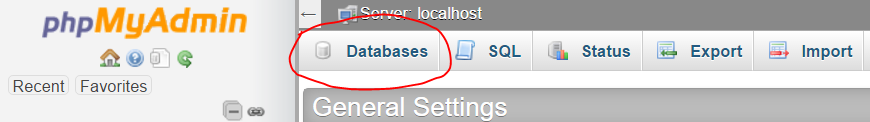

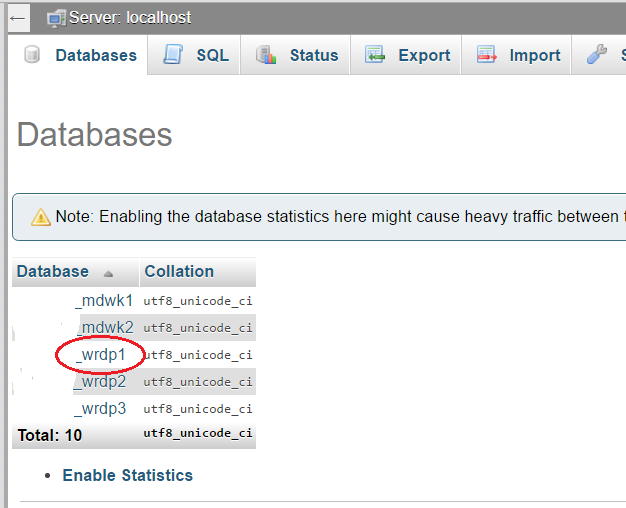

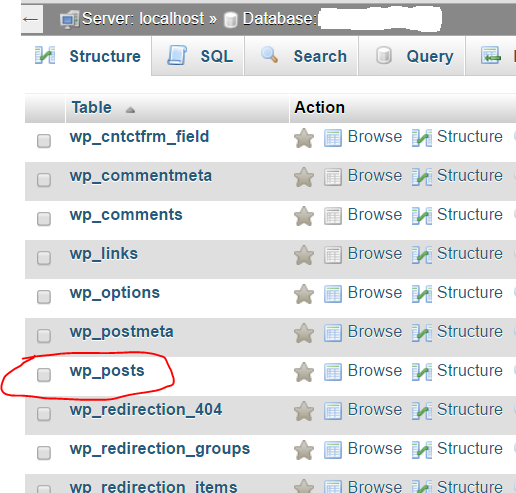

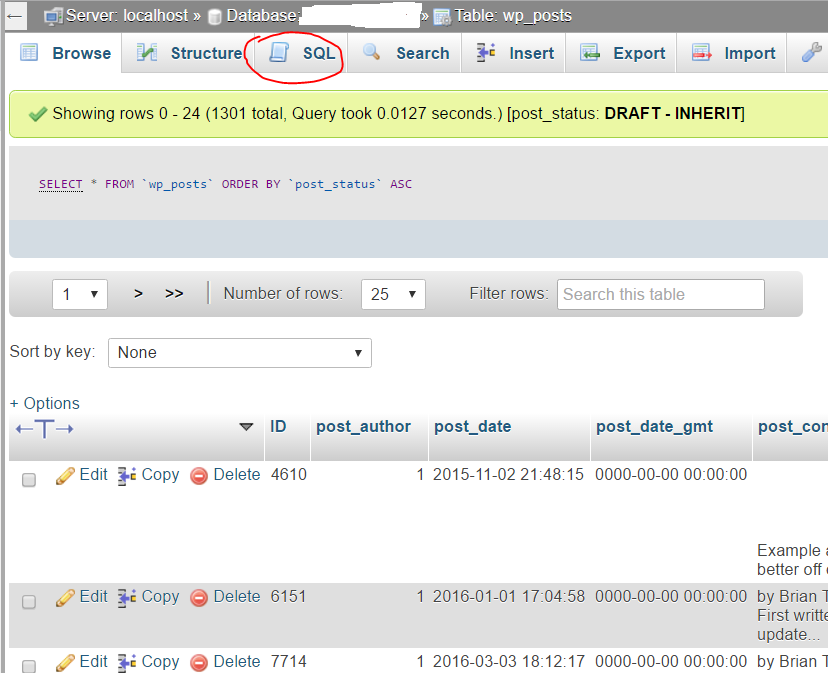

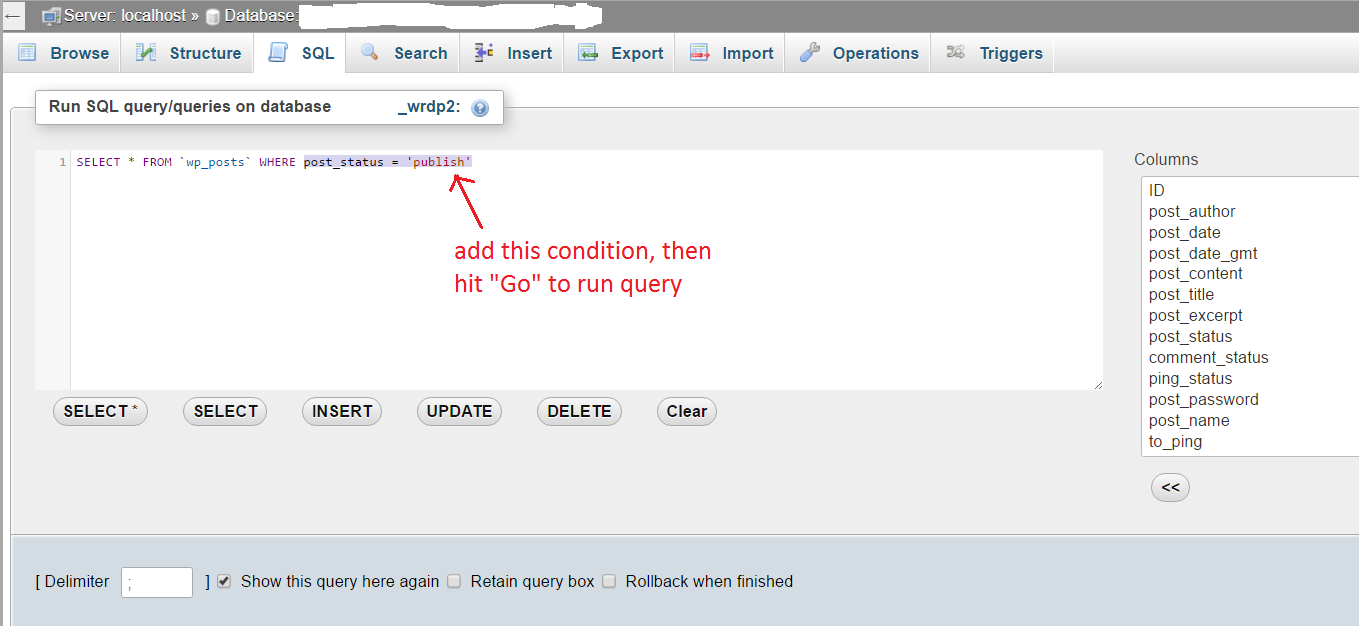

Then click on the circled red items in the next few screenshots:

Then I edited the SQL query as shown below to get only published posts. If you don't filter down to only published posts, you'll get several copies of each post. In my case, I was getting 3-5 or more copies of each post, which would have translated to an extra 1000+ pages to print out.

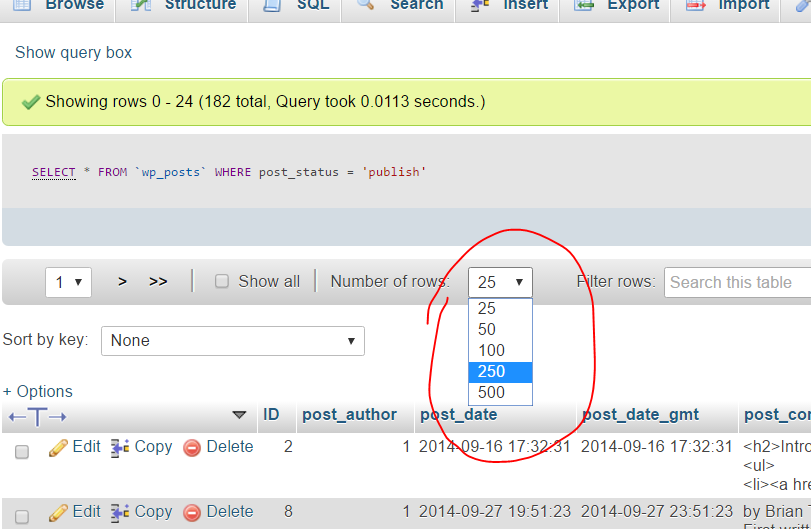

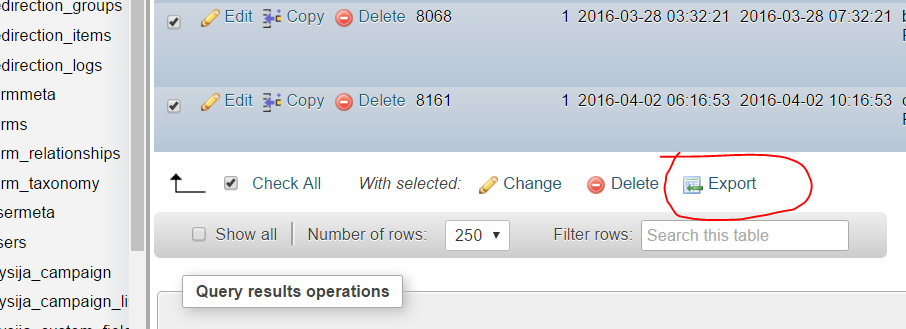
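
The exact query lives only in the screenshots, so the following is a guess at its general shape rather than a transcription. WordPress stores posts, pages, and their saved revisions as rows of one posts table (named `wp_posts` under the default table prefix), and revisions carry a different `post_status` than the published version. The sketch below uses Python's built-in `sqlite3` with an invented five-row stand-in table, purely to show the effect of the filter. A real WordPress database runs on MySQL or MariaDB and has many more columns.

```python
import sqlite3

# Tiny in-memory stand-in for wp_posts. The rows are invented for this demo.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE wp_posts ("
    "ID INTEGER PRIMARY KEY, post_title TEXT, post_content TEXT, "
    "post_status TEXT, post_type TEXT)"
)
conn.executemany(
    "INSERT INTO wp_posts (post_title, post_content, post_status, post_type) "
    "VALUES (?, ?, ?, ?)",
    [
        ("My essay", "Final text", "publish", "post"),
        ("My essay", "Older version 1", "inherit", "revision"),
        ("My essay", "Older version 2", "inherit", "revision"),
        ("About", "About this site", "publish", "page"),
        ("Unfinished", "Work in progress", "draft", "post"),
    ],
)

# Assumed form of the filter: keep only rows whose status is 'publish'.
PUBLISHED_ONLY = (
    "SELECT post_title, post_content FROM wp_posts "
    "WHERE post_status = 'publish' ORDER BY ID"
)

all_rows = conn.execute("SELECT post_title FROM wp_posts").fetchall()
published_rows = conn.execute(PUBLISHED_ONLY).fetchall()
print(len(all_rows), "rows in total;", len(published_rows), "published")
```

In phpMyAdmin you would paste only the SQL string, adjusted for your table prefix. On a real site you may also want to add a condition on `post_type` (for example, keeping only `'post'` and `'page'`), since other kinds of rows can also have the `publish` status.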

Once you get the results of the query, select all of the posts. To do this, I first set the number of rows shown to be larger than the total number of posts:

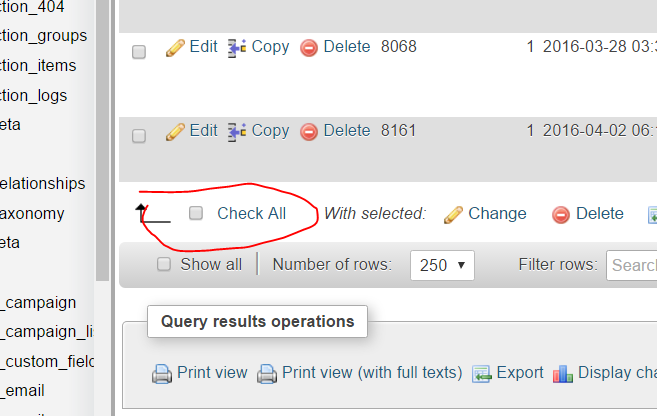

Then I went to the bottom of the page and clicked "Check All":

Then "Export":

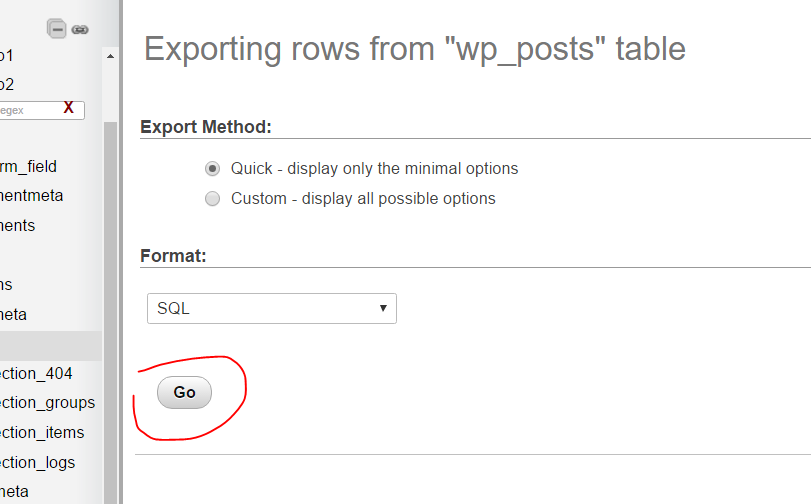

Then I clicked "Go" on the next screen:

This started a download of a .sql text file containing the contents of my posts. I did spot checks to verify that snippets from various pieces on my site were indeed contained in this file exactly once. Then the file was ready to be printed.
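
If you'd rather not do the spot checks by eye, a small helper along these lines could count how often each snippet appears in the export. This is only a sketch. The function name and the file name in the usage note are made up for illustration.

```python
def spot_check(dump_path, snippets):
    """Return a dict mapping each snippet to how many times it occurs.

    Occurrences are counted without overlap. A count of 1 is the goal:
    0 means the text is missing, and 2 or more suggests duplicate copies
    (for example, revisions) slipped into the export.
    """
    with open(dump_path, encoding="utf-8", errors="replace") as f:
        dump_text = f.read()
    return {snippet: dump_text.count(snippet) for snippet in snippets}
```

For example, `spot_check("wp_posts.sql", ["a distinctive phrase from one essay"])` should return a count of 1 for that phrase. SQL dumps typically escape quotation marks and line breaks, so pick snippets that sit on one line and contain no apostrophes or quotes. Otherwise a snippet that is really present may be reported as missing.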

Formatting text for printing

I copied and pasted the file text into Microsoft Word, set the margins ("Page Layout" -> "Margins") to 0.5 inches on all sides, and set the text size to 8. Of course, you can choose different settings if you want.

Including PDFs

If you have some articles on your website in PDF/Word/etc. formats, make sure to include them in the set of files to print as well, since they won't be included in the HTML / database dump described above.

I wrote a Python script, find_and_merge_PDFs_in_directory.py, that merges all the PDFs in a website download into a specified number of combined PDF files. For example, if the downloaded website is in a folder mywebsite/, and if you want the PDFs merged into 5 big output files, then put the Python program in the parent folder of mywebsite/ and run

python find_and_merge_PDFs_in_directory.py mywebsite 5

If you want all the PDFs merged into one big output file, replace 5 in the above command with 1. I allow the option of splitting into several output files in case a single combined PDF file would be too big. See the comment at the top of the script file for further instructions.
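
As a rough illustration of the find-and-split step (not the script itself), the sketch below collects every PDF under a folder and divides the list into a requested number of groups. Both function names are invented here. Balancing the groups by file count rather than by page count or file size is an assumption of this sketch. Actually merging each group into one output file requires a third-party PDF library and is not shown.

```python
import os


def find_pdfs(download_dir):
    """Return a sorted list of paths to all .pdf files under download_dir."""
    pdf_paths = []
    for folder, _subfolders, filenames in os.walk(download_dir):
        for filename in filenames:
            if filename.lower().endswith(".pdf"):
                pdf_paths.append(os.path.join(folder, filename))
    return sorted(pdf_paths)


def split_into_groups(items, group_count):
    """Split items into group_count contiguous groups of near-equal size.

    Earlier groups receive one extra item when the division is uneven.
    Some groups are empty when there are fewer items than groups.
    """
    if group_count < 1:
        raise ValueError("group_count must be at least 1")
    base_size, extra = divmod(len(items), group_count)
    groups = []
    start = 0
    for index in range(group_count):
        size = base_size + (1 if index < extra else 0)
        groups.append(items[start:start + size])
        start += size
    return groups
```

With these, `split_into_groups(find_pdfs("mywebsite"), 5)` would give five lists of paths, each destined for one combined file.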

A combined PDF file sometimes seems to have different length/width dimensions for different PDFs, and some PDFs may be rotated 90°, but I found that these irregularities are tolerated without a problem by Office Depot's printing service (described in the next section).

For some reason, you might find that a few PDF files can't be processed properly by my Python script. If that's the case, you'll see "ERROR" messages printed out, saying that you need to separately add the PDFs that don't merge properly to the list of PDFs you upload to Office Depot for printing. In my tests, I encountered two PDF files that couldn't merge, but I was still able to upload them to Office Depot for printing without problems. (Apparently Office Depot handles PDFs more robustly than my program does.)

Finding a printing service

I do paper printouts using Office Depot. To do this, I go to http://www.officedepot.com/ , log in, go to "Print & Copy", click "Document Printing Services", and click "Manuals". If you're trying to print out more than ~500 pages (or ~250 sheets of paper when printed double-sided), you may have to instead click "Presentations" under "Document Printing Services" and then choose "Presentation Binders", since binders can hold more total sheets of paper.

From here you can upload your files. There's a limit on how many files you can upload simultaneously, so you might need to merge PDFs before uploading them if you have lots of them to print out. You can print many hundreds of pages at once and have them delivered to your house for less than $100.

These steps work as of July 2017 in the USA, but some things may have changed by the time you're reading this.

Fireproofing

You may want to store the paper printouts in a fireproof safe to guard against your house burning down. That said, in the event of most house fires, the cloud versions of your data will still exist, so you can just make new printouts. The exception would be if a severe disaster capable of destroying cloud data also caused widespread fires; in this (rather unlikely) event, a fireproof container would be useful.