Summary

When downloading whole websites to back them up, it sometimes helps to give the downloading program extra configuration details, either to make the download work properly or to avoid retrieving lots of redundant files. This page lists the special settings that I apply when downloading certain websites using SiteSucker.

effective-altruism.com and lesswrong.com

as of 21 Jul. 2017

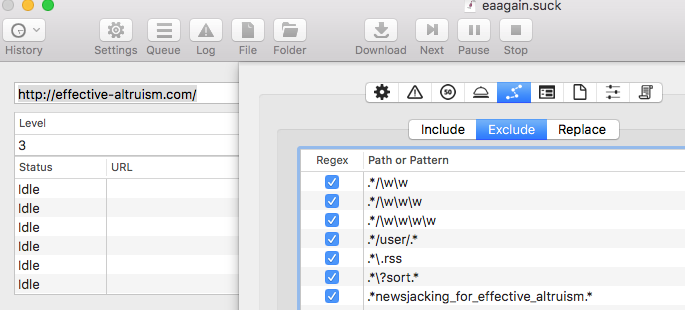

In SiteSucker, I add the following regex exclusion rules:

.*/\w\w .*/\w\w\w .*/\w\w\w\w .*/user/.* .*\.rss .*\?sort.* .*newsjacking_for_effective_altruism.*

Here's a screenshot of what this looks like in SiteSucker:

The .*/\w\w, .*/\w\w\w, and .*/\w\w\w\w rules exclude links to specific comments on a post, which are redundant because the main post HTML file already includes comments. (Maybe these rules omit very long comment threads that aren't fully displayed on the main post page? But those aren't very common, so it's probably ok to lose them.)

The .*/user/.* rule omits user pages, which are redundant because they only show comments that can be found on posts.

The .*\.rss rule avoids .rss files that duplicate the corresponding post's content.

The .*\?sort.* rule omits copies of a post that differ only in how the comments are sorted.

What about the .*newsjacking_for_effective_altruism.* rule? This is just a hack. I found that SiteSucker was freezing up when "Analyzing" this particular page. I don't know why, but omitting this page made the rest of the download work fine.
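Rules like these can be sanity-checked before starting a long download. The following is a minimal Python sketch, not part of SiteSucker. It assumes that an exclusion rule has to match the entire URL, which is why it uses re.fullmatch; that is a guess about how SiteSucker applies patterns, based on the fact that the comment rules have no trailing .* on them. The sample URLs are made up for illustration.

```python
import re

# Exclusion rules copied from the list in this section.
RULES = [
    r".*/\w\w",
    r".*/\w\w\w",
    r".*/\w\w\w\w",
    r".*/user/.*",
    r".*\.rss",
    r".*\?sort.*",
    r".*newsjacking_for_effective_altruism.*",
]


def is_excluded(url):
    """Return True if any rule matches the whole URL (assumed semantics)."""
    return any(re.fullmatch(rule, url) for rule in RULES)


# Hypothetical URLs, invented only to exercise the rules.
samples = [
    "http://effective-altruism.com/ea/1a/some_post/",
    "http://effective-altruism.com/ea/1a/some_post/xyz",
    "http://effective-altruism.com/user/someone/",
    "http://effective-altruism.com/ea/1a/some_post/.rss",
    "http://effective-altruism.com/ea/1a/some_post/?sort=top",
]

for url in samples:
    print("skip" if is_excluded(url) else "keep", url)
```

Under that assumption, only the first sample URL (the main post page) is kept; the other four are skipped.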

felicifia.org

as of 7 Aug. 2017

In SiteSucker, I add the following regex exclusion rules:

    .*#p.*
    .*memberlist.*
    .*p=.*
    .*php\?mode.*
    .*search.*
    .*sid=.*
    .*posting\.php.*
    .*view=print.*
    .*www.*
    .*php\?t=.*

Using these rules, Felicifia downloaded very cleanly, with only one copy of each forum thread. I checked 10 diverse forum threads on the live website, and all 10 of those threads were retrieved in my download (recall = 100%).

If you don't use the .*www.* exclusion rule, you get roughly two copies of everything: one with and one without www. at the beginning. And if you don't use the .*php\?t=.* exclusion rule, you get two copies of each thread: one where the URL specifies both the forum and the thread number, and one where only the thread number is specified.
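To see which rule catches which kind of duplicate, here is a small Python sketch that reports the first Felicifia rule matching a URL. It is only an illustration: the URLs are hypothetical, written in the usual phpBB style, and matching against the whole URL with re.fullmatch is an assumption about how SiteSucker applies patterns.

```python
import re

# Felicifia exclusion rules from this section, in the order listed.
RULES = [
    r".*#p.*",
    r".*memberlist.*",
    r".*p=.*",
    r".*php\?mode.*",
    r".*search.*",
    r".*sid=.*",
    r".*posting\.php.*",
    r".*view=print.*",
    r".*www.*",
    r".*php\?t=.*",
]


def first_matching_rule(url):
    """Return the first rule that matches the whole URL, or None if none do."""
    for rule in RULES:
        if re.fullmatch(rule, url):
            return rule
    return None


# Hypothetical thread URLs, invented for illustration.
samples = [
    "http://felicifia.org/viewtopic.php?f=7&t=123",      # forum + thread
    "http://www.felicifia.org/viewtopic.php?f=7&t=123",  # www. duplicate
    "http://felicifia.org/viewtopic.php?t=123",          # thread-only duplicate
]

for url in samples:
    print(first_matching_rule(url), url)
```

In this sketch, only the first URL, which names both the forum and the thread and has no www. prefix, matches no rule, so it is the single copy that would be kept.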

The .*sid=.* exclusion rule is unreliable. Sometimes the forum URLs include &sid=, and in that case using the rule may make the download fail. Unfortunately, if you allow &sid= URLs, the download may never end because the session ID keeps changing over time. At other times the URLs don't have &sid= in them, and then applying the rule works. I don't understand why the .*sid=.* exclusion sometimes works and sometimes doesn't.
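One way to picture the session-ID problem: two URLs that differ only in their sid value are different strings, so a downloader that tracks visited pages by exact URL would presumably treat them as different pages, even though they point to the same thread. The Python sketch below illustrates this with two made-up URLs. The strip_sid helper is hypothetical and is not something SiteSucker provides.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def strip_sid(url):
    """Return the URL with any sid query parameter removed (hypothetical helper)."""
    parts = urlsplit(url)
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key != "sid"
    ]
    return urlunsplit(parts._replace(query=urlencode(query)))


# Two invented URLs for the same thread, seen under different session IDs.
a = "http://felicifia.org/viewtopic.php?f=7&t=123&sid=aaa111"
b = "http://felicifia.org/viewtopic.php?f=7&t=123&sid=bbb222"

print(a == b)                        # False: the raw strings differ
print(strip_sid(a) == strip_sid(b))  # True: same page once sid is dropped
```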