Contents

- "There are bigger problems to worry about"

- What's the difference between pain and preferences?

- Information integration in food webs

- Bitstring avoidance and pain avoidance

- Feeling free to not finish tasks

- Planned shower thoughts

- Disagreeing without explanation

- "Probabilities are not promises"

- Todo-list "crumbs"

- Small non-human carnivores eat a lot of animals

- Why can't humans fall asleep voluntarily?

- Machine learning as memorization

- Are simulation environments a limiting factor for real-world AI?

- Machine-learning work makes you appreciate errors

- Importance and difficulty of good sleep

- Prison violence is "cruel and unusual punishment"

- Multicellular organisms are water reservoirs

- On criticizing other cultures

- Evolving to eat plastic

- A possible test to distinguish pleasure vs. cravings

- Cryonics

- Motivation vs. "raw intelligence"

- Marriage name changes

- Pretending that work is play

- IQ reductions generally worse for altruists than egoists

- My cartoon understanding of Hebbian learning

- Being surprised by interlocutors in dreams

- On banning white supremacists

- Non-trivial instantaneous learning is science fiction

- Everything is kind of a simulation

- Why I'm wary about multiple-choice questions

- On not getting depressed by the world's suffering

- Peak shift and hair length

- Should goals always be open to revision?

- When OCD is rational

- Using novelty detection when researching

- Tyranny of adults

- Why I personally don't like prize contests

"There are bigger problems to worry about"

26 Dec. 2017

People sometimes reason in ways like this: "I used to think that avoiding stepping on bugs was important. But then I realized that untold numbers of bugs are getting crushed, eaten, poisoned, and so on every day. The number of bugs that I step on is trivial by comparison. So I'm not going to worry about stepping on bugs anymore." This is the kind of reasoning that the commonly told "Starfish Story" aims to counter.

There's a right way and a wrong way to make use of the idea that your impact is tiny compared with the magnitude of the problem. The wrong way is to give up entirely because the task seems hopeless. But the right way is to ask whether your current approach is inefficient and whether you could be helping on a much larger scale in some other way. The fact that your efforts are small compared with the problem is actually relevant to how motivated you should be to continue your current approach, but that's because it suggests that you might want to look for a different, higher-impact approach.

Edmund Burke is associated with the quote that "No one could make a greater mistake than he who did nothing because he could do only a little." This is not quite right, because the quantitatively bigger mistake would be made by him who only did a little when he could have done a lot. The wrong way to respond to the "there are bigger problems" idea is to do nothing instead of doing a little; the right way to respond is by doing a lot instead of a little.

All of that said, I think there can often be instrumental and perhaps personal/spiritual reasons to sometimes still care about avoiding small-scale harms in addition to focusing on the bigger picture.

What's the difference between pain and preferences?

26 Dec. 2017

Different utilitarians disagree about whether hedonic experiences or preferences should determine an individual's welfare. Subjectively, we can notice how pain/pleasure and preferences don't always coincide. However, explaining this difference at the level of brain functions is slippery.

I expect that a complete functionalist account of the distinction between pain/pleasure and preferences would be complex and partly arbitrary, in a similar way as a complete account of the differences between "liberty" and "justice" would be complex and partly arbitrary. However, here I mention a speculative picture that I made up without consulting the existing literature on this topic.

It seems to me that preferences are an important component of morally important pain. In pain asymbolia, "patients report that they have pain but are not bothered by it". Because this kind of pain doesn't involve any preference to avoid it, I would guess that it doesn't count as bad even according to many hedonistic utilitarians. This suggests that maybe the morally important hedonically based preferences can be seen as subsets of the general class of preferences. Hedonistic preferences tend to be the more "raw" drives that are associated with our reward/punishment systems. In contrast, other kinds of preferences may be based more on "higher reasoning" systems or at least other non-reward/punishment inputs.

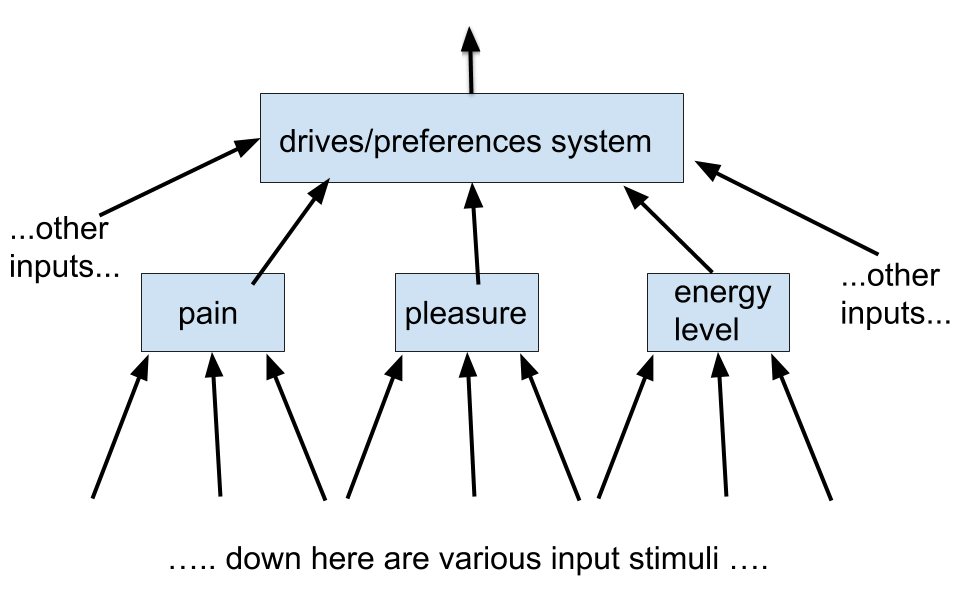

One way-oversimplified picture might be as follows. Imagine a robot that computes a variety of variables based on its present conditions, such as "pain", "pleasure", "energy level", "adherence to rules", etc. These variables feed into a "drives/preferences system", which decides upon the robot's current motivations. The robot's current pain level is usually a strong input to its overall preferences, but pain can sometimes be overridden by other factors. In robots with pain asymbolia, the input channel that's supposed to feed the "pain" variable into the motivation system is broken. Hedonistic utilitarians care about the robot's drives that are based primarily on its "pain" and "pleasure" variables rather than on other factors, while preference utilitarians care about any of the preferences that the drives/preferences system computes.
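To make the robot picture a bit more concrete, here is a toy Python sketch. Everything in it is made up for illustration: the `Robot` class, the `pain_channel_intact` flag, the drive names, and the way each drive is computed are assumptions of mine, not a model of any real nervous system.

```python
from dataclasses import dataclass


@dataclass
class Robot:
    # State variables computed from present conditions (hypothetical 0..1 scales).
    pain: float = 0.0
    pleasure: float = 0.0
    energy_level: float = 1.0
    rule_adherence: float = 1.0
    # False models pain asymbolia: pain is still computed, but it never
    # reaches the drives/preferences system.
    pain_channel_intact: bool = True

    def preferences(self):
        """Drives/preferences system: turn state variables into drive strengths."""
        pain_input = self.pain if self.pain_channel_intact else 0.0
        return {
            "avoid_pain": pain_input,
            "seek_pleasure": self.pleasure,
            "recharge": 1.0 - self.energy_level,
            "follow_rules": 1.0 - self.rule_adherence,
        }


def hedonistic_concern(robot):
    """Only the drives fed mainly by the pain and pleasure variables."""
    prefs = robot.preferences()
    return {name: prefs[name] for name in ("avoid_pain", "seek_pleasure")}


def preference_concern(robot):
    """Every preference that the drives/preferences system computes."""
    return robot.preferences()


asymbolic = Robot(pain=0.9, energy_level=0.4, pain_channel_intact=False)
print(asymbolic.pain)                 # the pain variable is still high
print(hedonistic_concern(asymbolic))  # but no drive to avoid it is produced
print(preference_concern(asymbolic))
```

In this sketch the asymbolic robot still "reports" a high pain value, yet nothing in its motivation system is trying to get rid of that value, which is the sense in which the pain is not bothersome.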

Information integration in food webs

26 Dec. 2017

Consider the following statement: "a computational device integrates diverse signals from its environment over time into a summary indication of the status of the device's surroundings". This could refer to a sophisticated system of digital sensors, or perhaps a recurrent neural network. However, it can also apply to a much wider array of physical phenomena if we treat them as indicators of the conditions in which they're embedded.

Steel and Bert (2011) argue that "mesofauna has multiple advantages over microbial communities as indicators for the quality and status of compost" (p. 47). One reason is that "by being one or two steps higher in the food chain, mesofauna serve as integrators of physical, chemical, and biological properties related to their food resources" (p. 47). We can think of compost animals like mites and worms as being complex computations that summarize a diverse array of environmental inputs, such as whether the compost has enough oxygen, has enough food, is not too acidic, has predators, etc.

Bitstring avoidance and pain avoidance

23 Dec. 2017

Imagine a computer program that monitors a chunk of bits on disk. It aims to ensure that the chunk of bits doesn't contain the pattern 01001101100101. If that pattern is detected, then the program drops all other tasks and seeks to change the bitstring to something else, such as by directly rewriting the bits or setting off other processes that will change the bits. The program also monitors other processes in an effort to prevent them from changing the bit pattern back to 01001101100101.
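A minimal Python sketch of such a program might look like the following. It only covers the "detect and rewrite" part, treats the chunk of bits as a string of "0" and "1" characters, and uses function names (`contains_forbidden`, `erase_forbidden`) that I invented for illustration.

```python
FORBIDDEN = "01001101100101"


def contains_forbidden(bits: str) -> bool:
    """Return True if the monitored chunk contains the forbidden pattern."""
    return FORBIDDEN in bits


def erase_forbidden(bits: str) -> str:
    """Rewrite bits until the forbidden pattern no longer appears.

    Each pass flips the final bit of the first occurrence found. Because the
    pattern ends in "1", every flip turns a 1 into a 0, so the number of 1s
    strictly decreases and the loop must terminate.
    """
    while FORBIDDEN in bits:
        last = bits.index(FORBIDDEN) + len(FORBIDDEN) - 1
        flipped = "0" if bits[last] == "1" else "1"
        bits = bits[:last] + flipped + bits[last + 1:]
    return bits


chunk = "111" + FORBIDDEN + "000"
if contains_forbidden(chunk):
    chunk = erase_forbidden(chunk)  # drop everything else and fix the bits
print(contains_forbidden(chunk))
```

A fuller version would also watch other processes and veto writes that would reintroduce the pattern; that part is omitted here.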

From the outside, such a program appears somewhat strange. "Why does it care so much about that particular bitstring?", we might ask. Yet in a sense, pain-avoiding animals like ourselves are similar to this program. Certain events can produce patterns of neural firing ("1"s and "0"s of neural activity) that we recognize and seek to "erase" from our nervous systems. Why are those particular patterns of nervous activity so important? It's because they're the patterns that we're wired to try to prevent. In a similar way, the 01001101100101 pattern is morally bad precisely because a (somewhat) sophisticated agent is trying to prevent it.

Feeling free to not finish tasks

21 Dec. 2017

When I was in high school, I was obsessive about finishing all of my homework to perfection, even if this meant staying up until 3 am. If a task was assigned, I had to finish it, no matter what, right?

Toward the end of high school, I overheard a conversation with some friends about one person's experience in college. He was asked what he did when he had too much homework to get done during his normal waking hours. His reply was something like this: "I just went to bed and didn't finish my homework. Retaining my mental clarity was more important." I found this astonishing at the time. However, I now think this is pretty good advice in most cases, except for really important tasks.

I usually let drives like wanting to sleep, wanting to exercise, wanting to get solid work done, etc. take precedence over what I'm currently in the middle of doing, even if it involves a conversation with someone else. Especially if the task you're in the mood for is hard, like exercising or doing focused work, then it's a waste to squander an opportunity when you are in the mood for that task. If I feel energized enough to do something difficult but am still wasting time on a lower-effort activity, I begin to feel an "I ate too much yummy (vegan) cake" sensation build up in my brain and feel guilty about wasting the mood.

Being able to optimize your activities based on your present mood is a luxury that's made possible when you don't have an externally imposed schedule. I find that if I do have external requirements regarding what I work on, then I end up in a lot of suboptimal situations in which I have to do X but would rather do Y at any given time. For example, if you work in an office, you usually have to wait until the workday is over to exercise, even if you're more in the mood to exercise right now.

Planned shower thoughts

13 Dec. 2017

Showering is the only time during an ordinary day when I have no external source of stimulation available but am alert enough to think about important topics. (When going to sleep, I try to avoid important thoughts, since they would keep me awake. At all other times I have either a screen to look at or an iPod to listen to.)

For this reason, I sometimes "save up" questions for myself for the shower, so that I don't waste "higher-quality" time on such thoughts. For example, in the shower I might reflect upon how I spend my time, big-picture strategy for reducing suffering, the nature of consciousness, or (sometimes) a math problem. These are topics that I can think about for a while without much external input. Of course, I'm often lazy in the shower and use the time to relax.

Sometimes people say they have their best thoughts in the shower. I disagree with this, because I think better when I can interact with a stimulus, such as a written text. In the shower it's easy for my mind to wander, and I'm not able to take in new information or write down my insights right away.

Disagreeing without explanation

29 Nov. 2017

Suppose Alice posts an article she wrote, and Bob leaves a quick comment: "I'm too busy to go into details, but I largely disagree with this post."

On the one hand, such "drive-by disagreements" are useful in that they provide nonzero Bayesian evidence, especially if Bob is epistemically trustworthy. On the other hand, unexplained disagreements can be frustrating, especially for Alice, because Alice has no direct way of settling the dispute or correcting possible errors in her view.

A reasonable compromise might be to expect that Bob either should give at least one sentence of quick explanation or should link to a source that roughly expresses his own view on the subject.

"Probabilities are not promises"

28 Nov. 2017

Sometimes I've had conversations that go roughly like this:

Person: I might or might not be available to talk tomorrow. I'll let you know.

Me: No problem. What's your probability that you will be able to talk tomorrow?

Person: Well, I don't want to promise anything.

Me: That's ok. Probability estimates are not promises.

Person: Ok, fine: 60%.

Sometimes people are reluctant to give probability estimates because they feel as though doing so would be a quasi-promise, insofar as it might get my hopes up about things working out. But it's useful to be able to exchange the information contained in a probability estimate without any implied commitment.

Todo-list "crumbs"

19 Nov. 2017

I sometimes hear people say that they focus too much on quick tasks on their todo lists because those tasks are "easy wins". My own mindset, perhaps trained by years of thinking about how I best get work done, is often the opposite. If I'm feeling motivated and not tired at a given time, I try to bite off a big chunk of work, get the hardest parts done, and then leave remaining easy details for later. This is great, except that, like eating big chunks of food, it leaves behind lots of little "crumbs"—tiny tasks that individually don't require much effort but that collectively can make for a messy dining-room table, or a messy todo list. Crumbs may also take the form of inherently small tasks, like replying to emails or making a trivial edit to an essay. Because I want to bite off big tasks when possible, I sometimes go several days without cleaning up many crumbs. Fortunately, there are some periods when I'm less motivated to eat big chunks and am more in the mood to collect my crumbs.

Small non-human carnivores eat a lot of animals

First written: ~2013; last update: 8 Nov. 2017

Some non-human predators that are smaller than humans eat more individual vertebrate animals, and sometimes even more pounds of meat, than humans do.

A typical omnivorous human eats a small fraction of a single animal each day, except when eating small fish or other small seafood. US per-capita meat consumption is about 271 pounds per year, less than a pound per day.

In contrast:

- "Adult Emperor Penguins consume 2-5 kg (4.4-11 lb) of food per day except at the start of the breeding season or when they are building up their body mass in preparation for molting. Then they eat as much as six kilograms (thirteen pounds) per day." (source) "Each penguin eats about one pound of fish per day." (source) "Each of the Zoo's penguins eat[s] 2 pounds of fish per day" (source)

- "If you do the math, you will find that one barn owl needs to eat about 79 pounds of mice a year." This amounts to about 3.5 individual mice per day. (source)
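The arithmetic behind these comparisons can be checked with a few lines of Python. The input figures are the ones quoted above; the per-mouse mass is merely what those figures imply, not a number taken from any of the sources.

```python
LB_TO_G = 453.592      # grams per pound
DAYS_PER_YEAR = 365

# Human: 271 pounds of meat per year.
human_lb_per_day = 271 / DAYS_PER_YEAR

# Barn owl: 79 pounds of mice per year, about 3.5 mice per day.
owl_lb_per_day = 79 / DAYS_PER_YEAR
owl_g_per_day = owl_lb_per_day * LB_TO_G
implied_mouse_g = owl_g_per_day / 3.5   # derived, not from the sources

# Emperor penguin: 2-5 kg of food per day, converted to pounds.
penguin_lb_per_day = (2000 / LB_TO_G, 5000 / LB_TO_G)

print(f"human:   {human_lb_per_day:.2f} lb/day")
print(f"owl:     {owl_lb_per_day:.2f} lb/day ({owl_g_per_day:.0f} g/day)")
print(f"mouse:   {implied_mouse_g:.0f} g each (implied)")
print(f"penguin: {penguin_lb_per_day[0]:.1f}-{penguin_lb_per_day[1]:.1f} lb/day")
```

So the human figure works out to roughly three quarters of a pound per day, while the owl's roughly 98 grams per day is spread across several whole animals of about 28 grams each.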

Of course, animals eaten by non-human predators aren't factory farmed, which makes a big difference. Still, the deaths of these prey animals are arguably worse than those in human slaughterhouses on average because there's not even a perfunctory attempt at stunning. Imagine being a fish swallowed live by a penguin. While human meat consumption causes lots of suffering, so does predation in nature.

Why can't humans fall asleep voluntarily?

8 Nov. 2017

Falling asleep takes time that could be used for other things. So why didn't humans evolve the ability to voluntarily put themselves to sleep? One might say that falling asleep, unlike moving muscles, isn't something a brain can "just decide to do". But humans can wake up voluntarily and very quickly.

One hypothesis is that if humans could sleep voluntarily in a similar way as they can hold their breath, they would use the ability as a simple form of "wireheading", to avoid painful emotional experiences like hunger or social rejection by sleeping them away rather than dealing with them. In other words, humans could avoid the "things that keep us up at night".

Another possibility is that voluntary sleep would allow for sleeping during the day, which would presumably be bad for diurnal animals.

I see that someone asked this same question on Quora, but none of the answers is useful.

Machine learning as memorization

5 Nov. 2017

When I worked at Microsoft, one of my colleagues described our machine-learning models as "memorizing" the training data. I found this to be an interesting perspective, because ordinarily, supervised learning is described as "building a predictive model" or something of that sort. But there is a sense in which supervised learning is fundamentally a kind of memorization, since it involves learning that "this input maps to this output", though doing so in a fuzzy enough way to allow for generalization. Of course, overfit machine-learning models are even closer to pure memorization of the training set.

The idea of learning as memorization can be applied to reinforcement learning too. In a simple, deterministic environment like Grid World, Q-learning will over time figure out what the best action is in a given state and then do that. The end of the process is just kind of a lookup table: "if I'm in this state, then take this action". Q-learning using neural networks isn't a pure lookup table of this sort, because you're rarely in the exact same state twice, but it's still like a fuzzy lookup table: "when I'm in this kind of general situation, then take this action". Of course, the underlying details of implementing Q-learning are more complex, but this simplistic description helps make the process less mysterious. In some sense, a Q-learning agent tries a bunch of actions, and through trial and error, it "memorizes" what action to take in each situation. (I'm not an expert on Q-learning, so I welcome corrections if this description is mistaken.)
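Here is a minimal sketch of the lookup-table idea, using tabular Q-learning on a one-row grid that I made up for illustration: five cells, with a reward of 1 for stepping into the rightmost one. The hyperparameter values and the function names (`step`, `train`, `lookup_table`) are my own assumptions rather than anything standard.

```python
import random

N_STATES = 5                 # cells 0..4 in a one-row grid; cell 4 is the goal
ACTIONS = (-1, +1)           # step left or step right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2   # learning rate, discount, exploration


def step(state, action):
    """Deterministic environment: move, clamp to the grid, reward at the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward


def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            # Epsilon-greedy: mostly exploit the table, sometimes explore.
            if rng.random() < EPSILON:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            next_state, reward = step(state, action)
            best_next = max(q[(next_state, a)] for a in ACTIONS)
            # Standard Q-learning update toward reward + discounted best next value.
            q[(state, action)] += ALPHA * (reward + GAMMA * best_next - q[(state, action)])
            state = next_state
    return q


def lookup_table(q):
    """The 'memorized' policy: for each non-goal state, the best-known action."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}


print(lookup_table(train()))
```

After training, the agent's behavior is summarized entirely by the small dictionary that `lookup_table` returns, which is the "if I'm in this state, then take this action" table described above.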

Are simulation environments a limiting factor for real-world AI?

First written: 21 Oct. 2017; last update: 7 Jun. 2018

I don't think artificial-intelligence (AI) progress in board or video games portends much about "AI taking over the world" anytime soon, for a few reasons. A main reason is that the real world is vastly more complex than game worlds and also doesn't allow for enormous numbers of simulated rounds of play. An Atari-playing or Go-playing reinforcement learner can run through enormous numbers of rounds of the game at high speed in order to learn by trial and error. In most real-world settings, actions take place at the speed of the physical world, and rewards based on interactions with humans are bottlenecked by human input.

Irpan (2018) notes that many current deep reinforcement-learning systems require very large numbers of samples to achieve good performance: "learning a policy usually needs more samples than you think it will." Irpan (2018) continues: "There’s an obvious counterpoint here: what if we just ignore sample efficiency? There are several settings where it’s easy to generate experience. Games are a big example. But, for any setting where this isn’t true, [reinforcement learning] faces an uphill battle, and unfortunately, most real-world settings fall under this category."

Piekniewski (2018): "additional compute does not buy much without order of magnitude more data samples, which are in practice only available in simulated game environments."

In addition, many actions that a real-world reinforcement learner might try to take would be disastrous and get the AI shut down. For example, if an AI that was set to maximize the profits of a company tried out random actions like firing a bunch of employees or selling a bunch of assets, it would drive the company into the ground before it had completed a single "play of the game". In simulated games, recklessly trying random actions is no problem, because you can reset the simulation and try again.

So it seems that if an AI doesn't make extensive use of a priori reasoning, it must use simulations of the real world in order to allow for trial-and-error learning. And its performance will be limited by the accuracy of those simulations. Does this suggest that one significant obstacle to world-conquering AI is the availability of highly realistic world simulations? If so, how hard is it to build such simulations, and how computationally expensive would they be to run?

The Campaign AI of Total War: Rome II uses Monte-Carlo Tree Search, as well as some hand-crafted optimizations, to choose high-level strategies for literally taking over the world (Champandard 2014). (I haven't played the game and so could be misunderstanding a bit.) If the rules of the real world matched those of this video game, then presumably such an AI would be a formidable force. A main reason why this game AI doesn't take over the real world is that the real world's dynamics are vastly more complicated.

Of course, even if we had computationally fast, high-accuracy simulations of the real world, present-day AIs probably would still perform poorly because of the huge space of possible actions to take. There are probably lots of special tricks that humans use to compress the complexity of the real world and to locate good solutions more quickly. Still, I'm curious how much of the distance to world-conquering AI is due to limitations of AI algorithms vs. how much is due to limitations of world simulations. (Of course, improvements in the theory of world-simulation building can also directly contribute to improved algorithmic performance for model-based AI agents who themselves build miniature world models.)

Is building more realistic world simulations easily scalable? Or do you need lots of hand-crafted details specified by domain experts? Right now, the rules of video-game worlds are typically hand-crafted. The history of AI—in which learning from data usually eventually beats manual specification of rules—suggests that in the long run, simulation models of the world will probably be at least partly learned rather than specified directly.

Machine-learning work makes you appreciate errors

21 Oct. 2017

When I was learning to program in college, I was typically dismayed when I got errors in my programs, either when compiling or at runtime. One might think that it would be a relief to work on a project where you aren't constantly plagued by such errors. However, after doing machine learning a bit during college and then for a few years at Bing, I realized that hard errors are a blessing.

The problem with a lot of machine learning is that, except for literal code errors when running your program, errors are soft. You might mess up some configuration or feature, which results in degraded model performance, but there's no place in the code where the program stops and dumps out a stack trace. As a result, you have to go digging for the error yourself. If the etiology of the error is not obvious, you might spend many hours tweaking the machine-learning configuration to see what factor might be causing the poor performance, and training these new tweaked models might itself take hours or days. After lots of experience with soft machine-learning errors, I began to cherish the hard failures that are more prevalent in other realms of software engineering.
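The contrast can be shown with a toy example. The data, the threshold "model", and the `feature_index` setting below are all invented for illustration; the point is only that one misconfiguration crashes loudly while another quietly lowers accuracy.

```python
def accuracy(rows, labels, feature_index, threshold=0.5):
    """Score a trivial threshold classifier that reads one feature column."""
    preds = [1 if row[feature_index] > threshold else 0 for row in rows]
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


# Toy data: the label depends only on column 1; column 0 is mostly noise.
rows = [(0.9, 0.1), (0.8, 0.8), (0.2, 0.3), (0.4, 0.9), (0.3, 0.2), (0.6, 0.7)]
labels = [0, 1, 0, 1, 0, 1]

# Correct configuration.
print(accuracy(rows, labels, feature_index=1))

# Soft error: the wrong column is configured. Nothing crashes; the score
# is just worse, and you have to notice that yourself.
print(accuracy(rows, labels, feature_index=0))

# Hard error: a column that doesn't exist. The program stops immediately
# and the exception says where the problem is.
try:
    accuracy(rows, labels, feature_index=2)
except IndexError as err:
    print("hard failure:", err)
```

With real models the soft case is much harder to spot than it is here, because the drop in accuracy is usually small and each retraining run can take hours.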

This idea of not having hard errors also applies to most non-software domains. Except for simple spell and grammar checking, I can't compile my writing to make sure it's completely syntactically valid, nor can I execute my words and see if they contain logical flaws. Most realms of reasoning don't involve hard errors, at least until scientific theories are empirically tested.

Importance and difficulty of good sleep

16 Oct. 2017

While I'm generally skeptical of silver bullets in the realm of productivity, there's one factor that makes an enormous difference to my mood, alertness, exercise endurance, and ability to process information easily: how much sleep I got last night. This point is probably abecedarian to most readers, but when I see people arguing about what the optimal amount of sleep is, as if this were a non-obvious open question, I have to wonder if they have the same biology as I do. My own experience is that more sleep is always better. Anything less than 9 hours of sleep is liable to reduce my mental functioning to some degree, while more than 10 hours almost guarantees a long day of high productivity.

I'm a bit puzzled by interest in nootropics, meditation, diet, etc. as ways to improve productivity, when it seems to me that priorities #1, #2, and #3 should be sleep, sleep, and sleep. While I don't have great solutions for better sleep, I've found that long periods of exercise (ideally 1.5 hours) during the day tend to allow me to sleep for long periods at night, while getting fewer than 30 minutes of exercise often sets me up for poor, short sleep. The downside of this fact is that, under ideal circumstances, exercising and sleeping combined take up half of the day, but the result is that I feel great during the remaining time.

Unfortunately, I often don't sleep fully, perhaps because I wake up in the night and can't get back to sleep, or because I simply stop feeling tired after only 7 or 8 hours of sleep. On these days, I'm more likely to do work that requires less mental focus, such as small chores, replying to emails, and so on. On days when I get better sleep, I can do higher-value tasks that require mental clarity, like writing, fact-checking, and proofreading.

Prison violence is "cruel and unusual punishment"

16 Oct. 2017

The US Constitution's prohibition on "cruel and unusual punishments" is one of its most admirable features. Unfortunately, this ideal fails to be upheld in most actual US prisons, for a variety of reasons.

Prisoners, regarded as dregs of society, typically have poor living conditions and inadequate food. Prison guards are often indifferent or cruel, since nice people are unlikely to want to work in prisons.

And then there's violence committed by other prisoners. If society had the motivation to do so, we could significantly reduce such violence, but many people don't care about prisoner welfare, and some people may delight in prison violence because it's a way to sneak severe punishment into the prison system without any official government policy of violence.

In addition to reducing prison violence through better monitoring and enforcement, one obvious way to address this problem is to put fewer people in prison in the first place, including large numbers of non-violent drug offenders.

Multicellular organisms are water reservoirs

First written: 14 Oct. 2017; last update: 17 Dec. 2017

One trend I've noticed from observing invertebrates in my yard is that detritivorous invertebrates congregate in moist areas. That's because organic matter doesn't really break down until it gets wet. Why is that? My assumption is it's because bacteria generally require water in order to be active. I have the impression that bacteria are fundamentally like aquatic organisms, in that they swim around in liquid in order to perform their functions. Low-level biological processes like enzyme activity, RNA translation, and so on all occur within water and use diffusion(?) to move stuff around.

University of Illinois Extension (n.d.) says regarding backyard composting: "Decomposer organisms need water to live. Microbial activity occurs most rapidly in thin water films on the surface of organic materials. Microorganisms can only utilize organic molecules that are dissolved in water."

How is it, then, that plants, animals, and fungi can live on land without being submerged in water? The answer, it seems, is that they carry the water with them. Most organisms are mostly made of water. Multicellular organisms are essentially pools of water that can move around on land. By having such high water content, eukaryotic cells can perform the functions of life in solution the way bacteria do.

On criticizing other cultures

11 Oct. 2017

A typical city-dwelling, college-educated liberal is likely to agree with statements like this: "Rural white Southerners who take the Bible literally and think women have a duty to be housewives are unenlightened, and their cultural values are distasteful." Meanwhile, the same liberal would likely express tolerance toward rural indigenous peoples from Australia who took their religion very seriously and maintained distinct gender roles. This liberal would be quite offended if someone were to describe the indigenous Australian culture using the same terminology often applied to white Southern culture.

There's probably a good sociological reason for this double standard. Historically humans have been inclined to demonize people from other cultures, sometimes resulting in ghastly atrocities. Since acts of violence are more likely against foreign, minority cultures than against politically powerful American subcultures, it's more important to guard against racism against cultures that are more unfamiliar to most Americans. And since racism is such a strong instinct, we need to avoid any thoughts that might incline in that direction, including comparisons of which cultures are "better" or "worse" than others—unless those cultures are "American", in which case it's more acceptable to bash them. I suppose this trend extends generally, with it being more acceptable for a person of type X to critique a culture associated with other people of type X than it is for an outsider to do so.

When I was in school, there was such a strong effort to promote tolerance for other cultures and to challenge ethnocentrist tendencies that students were effectively taught something like: "Native Americans = good, Europeans = bad". It's obvious to everyone that this is too simplistic, but many liberals might be surprised to discover just how brutal some pre-European North American cultures were. (In contrast, it's well known that European cultures of centuries past were often barbaric.) For example, Iroquois warriors tortured their captives in horrific ways.

While I generally side with liberals on social issues, I appreciate the fact that conservatives are often more willing to admit that other cultures have shitty parts to them, just like our own culture has shitty parts to it. Of course, promoting this view as an unqualified mass message is risky because of its tendency to increase racism and xenophobia. Presumably the best solution is to try to change other cultures slowly and indirectly through education and spreading Enlightenment-style values.

And of course the cultural relativists are right in some sense. There is no objective moral truth, and evaluating good vs. bad parts of various cultures is ultimately just my opinion. But my opinion doesn't have to be one of tolerance toward anything as long as it's non-Western.

Evolving to eat plastic

5 Oct. 2017

Recently I was pondering the following question: if humans can create non-biodegradable organic compounds like plastics, why doesn't nature happen to produce non-biodegradable compounds too? Why is it that basically everything that life produces is biodegradable?

Today I learned that some organisms can eat plastic, including Pestalotiopsis microspora and Ideonella sakaiensis. So maybe it's just a matter of time until evolution discovers how to efficiently biodegrade many supposedly non-biodegradable compounds?

A possible test to distinguish pleasure vs. cravings

First written: 4 Oct. 2017; last update: 8 Sep. 2018

It's often claimed that happiness can outweigh suffering because people would be willing to endure some (possibly quite intense) suffering in exchange for big rewards. In this video I point out something that others have noted as well: trades to accept some suffering in return for later pleasure may sometimes be motivated by avoiding the pain of cravings rather than because pleasure has positive value.

Following is a simple and perhaps obvious test of this point. Imagine some pleasure that you would find immensely rewarding, such as falling in love, sex with the most charming and attractive partner you can imagine, winning a Nobel Prize, and so on. Consider an intense bout of suffering, such as breaking your arm or undergoing a painful medical procedure. Assuming no lasting physical or mental damage from the pain, do you feel like that amount of pain would be an acceptable cost in exchange for the future reward? I sometimes feel as though this would be the case. If it's not the case for you, try adjusting the pain and pleasure levels until the tradeoff does seem worth it.

Normally the thought experiment ends here. But we should check whether the tradeoff being accepted is driven by the positive value of the reward or by unpleasant cravings. To do this, imagine that there's a button you could press that would remove any cravings you have for the immense reward; instead, you would feel peaceful contentment with your present state. For example, if you're imagining the reward of eating chocolate cake, pressing the button would transform you into a state where you're not hungry, don't crave sugar, and you're instead just sitting quietly without any bothersome mental states.

If this craving-elimination button is available to you to press, are you still willing to make the tradeoff to accept some severe suffering in return for the reward? Personally I find that when adding such a button to the thought experiment, my inclination to accept suffering in return for the reward is significantly reduced. While it feels subjectively to me like the value of the hypothetical reward is driven by pleasure, when I imagine having the option to immediately end my desire for that pleasure, I would often be happy to do so, because ending the desire is a more instantaneous and less costly way to achieve the goal of returning to a contented state. Even a hypothetical infinite reward doesn't feel so compelling if I imagine having a button that would allow me to not crave the infinite reward and instead be content with what I have.

I'd be interested to hear whether and how much the addition of this craving-elimination button to thought experiments would affect other people's judgments about pain-pleasure tradeoffs.

Alexander Davis argued to me: "I think that the peaceful contentment mentioned is a kind of happiness in itself rather than neutrality" (email conversation, 2018 Sep 8, quoted with permission). Yes, one could maintain that a non-bothersome mental state is actually positive in hedonic value rather than neutral. However, it still runs counter to common assumptions to suggest that mere contentment is about equally valuable per unit time as intense pleasure or once-in-a-lifetime excitement, such as the feeling of winning a Nobel Prize.

We could try to overcome the objection that mere contentment already counts as pleasure by amending the thought experiment to talk about adding years to your life. Would you accept some intense pain for lots of extra years of pleasure/satisfaction, or would you press a button that takes away any cravings you might have for that pleasure and leaves your life to end on its normal schedule? We could assume that the extra years of life would be inserted right now so that you wouldn't have to wait. Also assume that the extra years of life wouldn't allow you to accomplish additional altruistic work, since if they would, then you might be morally obligated to accept the suffering in order to accomplish more altruistic work. Relative to this thought experiment, I would gladly let my life end on schedule rather than extending it with additional blissful years that would come at the cost of a short duration of extremely intense pain. Other people may have different intuitions.

Another possible objection to my thought experiment could be that if we have a button that removes cravings (desires for good things), we could also imagine a button that removes fears (desires against bad things). If someone pressed a button that removed fears, that person might then be more willing to accept suffering in return for rewards. That's true, but at least in my case, I wouldn't press a button to remove my fears if it meant I would later accept terrible future suffering. In contrast, I would press a button to remove my cravings even if it meant I would then not care about wonderful future happiness.

Cryonics

1 Oct. 2017

I'm occasionally asked for my views on cryonics. I haven't studied the topic in any detail, but here are a few general points. There have been many LessWrong discussions on the pros and cons of cryonics that go into much more depth.

From the perspective of saving human lives, cryonics seems less efficient than donating to developing-world health charities, unless you take the view that "saving lives" through non-cryonics methods only amounts to delaying death by a few decades, while cryonics offers the possibility of reviving a person who might then live for billions of years. Still, depending on your views about when mind uploading will become possible, saving the lives of children today might also allow more people to potentially be uploaded, and saving the life of a present-day child is cheaper than cryonically preserving one present-day person.

In addition, I don't see much moral difference between preserving existing people vs. creating new ones, except insofar as death violates the preferences of existing people, while merely possible people have no preferences to violate unless such people eventually do exist.

Viewed as a selfish luxury for oneself and close relatives, cryonics is cheaper than some other luxuries that people spend money on, such as having children, traveling frequently, or failing to take a higher-paying job. From that perspective, cryonics could make sense.

What about viewing cryonics as a form of life extension so that you can continue to have an altruistic impact for a long time to come? (Thanks to a friend for inspiring this question.) I'm skeptical of the return on investment here compared against achieving "immortality" via spreading one's values and ideas to other people through one's writings, movement-building efforts, etc. There's only a small chance that you'll be cryonically restored, and by the time you would be, society may have moved beyond the point where your cognitive abilities would be competitive, so it's doubtful you'd have much influence within the society that restored you, except maybe as a historical curiosity. Even if you'd be certain to be restored and would be able to meaningfully participate in future society, it's not clear that the altruistic payoff from preserving yourself would exceed the payoff from merely investing the money you would have spent on cryopreservation in the stock market and achieving compounding returns thereby. (There might be exceptions to this argument if you're a particularly special person, like Elon Musk.)

Personally, I wouldn't sign up for cryonics even if it were free because I don't care much about the possible future pleasure I could experience by living longer, but I would be concerned about possible future suffering. For example, consider that all kinds of future civilizations might want to revive you for scientific purposes, such as to study the brains and behavior of past humans. (On the other hand, maybe humanity's mountains of digital text, audio, and video data would more than suffice for this purpose?) So there's a decent chance you would end up revived as a lab rat rather than a functional member of a posthuman society. Even if you were restored into posthuman society, such a society might be oppressive or otherwise dystopian.

Motivation vs. "raw intelligence"

21 Sep. 2017

Given that I was valedictorian of my high-school class, you might expect that I was "quick-witted". This was often not the case. When we did math problems or reading assignments in class, I often struggled to keep up with my peers, who seemed to be able to read and work through material much faster than I could. Some students handed in their tests early, while I often scrambled to finish tests before the end of the period. My slowness probably improved my accuracy, but even keeping accuracy constant, it's plausible I would have been slower than many of my classmates. So why did I do so well?

The main answer is: hard work. I did studying and homework during almost all my waking hours, often even while eating dinner, and during most of the weekend. This "tortoise" approach cumulatively beat the "hare" approach that many of my classmates used to complete their schoolwork. (Some of my classmates did much of their homework during other classes or during lunch.)

I think the tortoise approach usually beats the hare approach. Most of the time, when someone is "smart" in some field, it's because that person has spent a large number of hours learning about that field. For example, people who are "good at math" tend to enjoy math and so have spent much more time practicing math than those who claim to be bad at it.

Marriage name changes

13 Sep. 2017

I personally don't understand the appeal of changing one's name as a result of marriage. It seems to me like a lot of work and confusion for little benefit. I imagine that the change involves at least hours of paperwork, changing one's name on various online accounts, updating friends about the change, etc. The situation is far worse if you're an academic whose citation counts will get messed up, or if you're a public figure whose old mentions in news articles won't necessarily show up when people Google your new name. Maybe one could make the case that the high cost of a name change is part of the point—a name change signals one's commitment. But there would seem to be less wasteful ways to signal commitment.

If one spouse doesn't change his/her last name, there remains the issue of what last name the children should have. This could be determined by a coin flip or by strategically assessing which last name sounds better. Or maybe the kids could get a new last name entirely?

Sometimes kids get a joint last name that combines the last names of the parents. This is nice in spirit but a nuisance in practice. One person writes:

My married surname has a hyphen in it. (It came that way; it's all his, and all one unit, it just happens to be spelled with a hyphen.) It is a MAJOR PAIN IN THE ARSE. Yes, I did mean to shout.

Yesterday the AAA lady chose the second half to address me by (after I had clearly enunciated and spelled the whole thing). The HVAC guy chose the first half, again after full and detailed introductions. People take that d***d hyphen as license to ignore half the name, rearrange its parts, or otherwise mangle it freely. Computers are even worse, because half the time they ignore the hyphen, and the other half the time they tell me it's invalid. It's especially fun when traveling, when they tell you in red unfriendly letters that your input must match your travel documents exactly, but they don't accept a hyphen as input.

Double last names are also unsustainable. If the current generation has two last names, then their children must have four, their children must have eight, and so on.

Pretending that work is play

10 Sep. 2017

In Calvin and Hobbes from 28 Aug. 1989, Calvin says: "It's only work if somebody makes you do it." I would add that tasks can also feel like work if you make yourself do them out of duty rather than because they're fun. This suggests a strategy for making tasks more fun, which I find is pretty successful in my case: pretend that the "chore" that you're doing is actually a leisure activity that you're doing as a form of "junk food". This makes the task less stressful and makes you feel somewhat as if you're procrastinating from actual work by doing the "fun" task (even if the fun task is your actual work).

I find that doing things because you force yourself to isn't very sustainable, and when people excel at some project, it's usually because they derive significant pleasure from that project. Hence, making one's work feel more like a fun hobby can be valuable. I think this is one domain where there may be some truth to the motivational-poster slogan that "It’s All About Attitude".

IQ reductions generally worse for altruists than egoists

7 Sep. 2017

There's a question to the effect of: would you add X points to your IQ if it also meant adding X pounds to your weight? I think the obvious answer is "yes" for almost any positive X. But wouldn't adding, say, 15 pounds to your weight reduce your life expectancy? Yes, but probably by no more than a year or two, and there wouldn't be severe quality-of-life impacts in the meantime. In contrast, an improved IQ would benefit my life for all of my remaining years. (There are some studies linking obesity to lower IQ. I haven't looked into the details, but even if there is causation from obesity to lower IQ, I'm intuitively skeptical that the effect is that big.)

In general, I tend to be more paranoid about health risks that impact cognitive function, such as lead, than about other health risks, such as those that cause cancer. Many health risks mainly take a few days, weeks, or months off your life expectancy, but reductions in brain functioning—as well as severe disabilities like blindness or deafness—impair performance for all of your remaining years.

Purely from the standpoint of maximizing personal happiness, modest IQ reductions may not seem particularly severe. However, from an altruistic standpoint, reduced cognitive performance could non-trivially deflate the quality of one's work. Thus, it's plausible a priori that from the perspective of an altruist whose cognitive functioning is important, population-wide risk guidelines for IQ-lowering substances are too lax relative to risk guidelines for life-shortening substances, though I have no knowledge of whether this is actually true in practice.

I'm uncertain how much IQ actually matters for most altruistic work. A lot of what I do is high-level conceptual thinking that doesn't obviously exercise the same kinds of skills that IQ tests prototypically measure. Still, if I approximate what it's like to have a lower IQ by recalling how I perform when I'm tired, then the reduction in performance does seem non-trivial even for "soft" skills and not just for careful logical reasoning.

My cartoon understanding of Hebbian learning

1 Sep. 2017

Recently I've been learning about how long-term potentiation (LTP) works, and I feel like I'm finally beginning to understand Hebbian learning, at least at a cartoon level. (I'm not an expert, and what I say here may be wrong.)

It's often said that "neurons that fire together wire together", but how does that help explain learning? This becomes more clear if we picture a setup in which two different neurons are both inputs to an output neuron. For example, suppose neuron A represents the sound of a bell, neuron B represents food, and neuron C triggers salivation. The neurons are hooked up as follows:

A --(weak synapse)--> C

B --(strong synapse)--> C

Before training, B's firing triggers C's firing unconditionally. If a bell is also rung at the same time as food is presented, then A, like B, will fire just before C. This firing together will strengthen the wiring together of A and C. Eventually, the A --> C synapse will be strong enough that the bell sound can trigger salivation on its own. B acts sort of like training wheels, ensuring that C fires at the right time in order to assist LTP between A and C.
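Here's a minimal Python sketch of the bell/food/salivation setup above, just to make the cartoon concrete. The starting weights, firing threshold, learning rate, and the simple "weight change = rate × pre × post" update rule are all assumptions I'm choosing for illustration; real LTP is far more complicated.

```python
def train_bell_synapse(trials=20, learning_rate=0.1, threshold=1.0,
                       food_present=True):
    """Toy Hebbian model: A (bell) and B (food) are both inputs to C (salivation).

    All numbers here are illustrative assumptions, not measured values.
    """
    w_a = 0.1  # weak A -> C synapse (assumed starting strength)
    w_b = 1.0  # strong B -> C synapse, held fixed in this sketch
    history = []
    for _ in range(trials):
        a = 1.0                            # the bell rings on every trial
        b = 1.0 if food_present else 0.0   # food is the "training wheels"
        # C fires if its total weighted input reaches the threshold.
        c = 1.0 if w_a * a + w_b * b >= threshold else 0.0
        # Hebbian update: strengthen A -> C only when A and C fire together.
        w_a += learning_rate * a * c
        history.append(w_a)
    return w_a, history


def bell_alone_triggers(w_a, threshold=1.0):
    """Can the bell by itself (no food) make C fire?"""
    return w_a * 1.0 >= threshold


trained_w_a, _ = train_bell_synapse()
untrained_w_a, _ = train_bell_synapse(food_present=False)
print("bell alone triggers C after paired training:", bell_alone_triggers(trained_w_a))
print("bell alone triggers C after bell-only trials:", bell_alone_triggers(untrained_w_a))
```

In this toy version, ringing the bell without food never strengthens the A --> C synapse, because C never fires and so there's no "firing together". Only when B forces C to fire does the weak synapse get a chance to grow.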

I wonder if a similar explanation gives insight into how conscious practice builds unconscious skills. For example, suppose you're playing the piano, and one note (represented by neuron C) is supposed to follow another note (represented by neuron A) in the song. How do you hook up A to C? You can use high-level deliberate attention to press the first piano key (triggering neuron A) and then press the second piano key (triggering neuron C). Because C fires shortly after A, the synapse from A to C gets strengthened. After practicing this enough times, the synapse from A to C is strong enough that you don't need external attention to stimulate the two neurons in succession, because A can stimulate C on its own. At this point, the skill has become "automatic". (I'm just making up this explanation based on what little I know about these topics. Probably many neuroscientists have actually written about this issue.)

Being surprised by interlocutors in dreams

31 Aug. 2017

Sometimes during dreaming, I have a conversation with another person in which the other person replies to me in a way that I find surprising, insightful, or otherwise unexpected. This is a curious fact, because my brain generated the other person's reply, and we're usually not surprised by our own insights (although sometimes we may be).

I know little about the neuroscience of dreams, but one speculation about how dream conversations work is as follows. In general, my brain generates verbal statements in an "unconscious" process of stitching ideas together, and once the inner words are finalized, a higher-level schema in my brain attributes those words to a mental representation of "myself". During dreams with another person, the same unconscious word-generation process occurs, but the words are attributed to the other person.

Still, my "surprise detectors" would know about my brain's sentence generation in either case, right? So why am I more surprised when the other person says something than when I say it? One extremely speculative idea is that the attribution of the words to oneself prevents the surprise reaction, since otherwise we'd be constantly surprised by our own thoughts?

At first I thought the explanation could be similar to why you can't tickle yourself:

the cerebellum can predict sensations when your own movement causes them but not when someone else does. When you try to tickle yourself, the cerebellum predicts the sensation and this prediction is used to cancel the response of other brain areas to the tickle.

In analogy, the language-generation parts of the brain predict what I'll say, and this cancels the response of my language-listening brain regions when I hear those words. Since we can't predict what other people will say, we can be surprised by it. However, during a dream, the "other person" is generating language using my own left hemisphere, which should seemingly cancel out any surprise.

On banning white supremacists

31 Aug. 2017

Following the 2017 Charlottesville rally by white supremacists and neo-Nazis, some white supremacists were banned from college, from OkCupid, and so on. Technically this doesn't violate the First Amendment to the US Constitution because these are private organizations, but in my opinion, banning people from broad organizations like a college based on ideology alone—rather than because they're engaging in harassment or violence—is a generalized form of free-speech violation. These broad types of institutions should treat people based on their actions, not their beliefs. (I would support exceptions to this rule for, e.g., police officers, judges, and others who hold significant power over others' lives and are expected to serve the community.)

I'm a consequentialist, and ultimately the analysis of whether it's better all things considered to violate the free speech of white supremacists is an empirical question that would require extensive analysis to answer. However, insofar as I have deontological intuitions, the principle of free speech (both by the US government and more generally in other parts of society) is near the top of my list of strongly felt convictions. I think it's rational to be much more cautious about being willing to violate free speech than being willing to make more ordinary policy decisions, because freedom of speech is a meta-level principle within which more direct policies can be debated in a free society. That's why Constitutional amendments are harder to pass than ordinary laws. It's similar to being more cautious about updates to an operating system's kernel than about installing a random new software application on that operating system.

I like Noam Chomsky's famous quote that "If we don't believe in freedom of expression for people we despise, we don't believe in it at all." The idea that it's ok to ban, intimidate, or even punch neo-Nazis "because they're bad and I can get away with it because they're in the minority" is the same logic that the Nazis themselves used. Of course, the actions of the Third Reich were far more severe than, say, the actions of Antifa, and drawing parallels here could be seen as diminishing the horror of what the original Nazis did. But I still think we should be better than the other side by respecting their rights in cases where they wouldn't have respected the rights of others.

Perhaps part of the reason I care so much about free speech is that my own views are sometimes very far outside the mainstream, which helps me understand what it feels like to hold a minority viewpoint. Of course, in many ways my views are on the other side of Nazis, in that I believe that more total beings deserve moral consideration than mainstream morality does, while Nazis believe that fewer beings deserve moral consideration than the mainstream does.

My significant differences of opinion from the mainstream also give me a broader perspective. For example, I think the practice of eating meat, which most people in the contemporary West do, can be seen from certain perspectives as a grave moral atrocity that, in another age, might be seen as grounds for banning or even imprisoning people. Every year, a typical American omnivore brings 23.7 chickens into short, miserable lives that end with agonizing deaths. Unlike white supremacists who merely say horrible things (with potentially harmful long-run consequences), omnivores more immediately cause horrific experiences to innocent beings in the present. And of course, it's not just omnivores who cause terrible suffering to innocent animals; we all do to some extent through plant farming, roadkill, and so on.

Some of the same individuals who declare that white supremacists are terrible people also sometimes say things like, "It's ok to eat meat because humans are superior to animals." I agree that we should call out white supremacy through verbal arguments (but not coercive actions), especially to avoid backsliding on the progress we've made toward racial equality. But a somewhat more distant perspective on the broader spectrum of possible moral views is at least humbling and may help to avoid the hubris of thinking "We've got it all figured out in the department of what types of beings deserve moral consideration. Nazis bad, us good, end of story."

Non-trivial instantaneous learning is science fiction

23 Aug. 2017

Many years ago, I browsed a book about learning that said something like this: a few decades from now we might have drugs(?) that will instantly rewire your brain to teach you new things, without your having to learn the material the usual way. At the time I didn't know much about the brain, so I assumed this might be possible. Now I realize that this is basically bullshit.

Learning produces changes in the physical state of the brain, especially synapse connectivity. In principle, a sufficiently advanced neuroscientist could "diff" a brain before and after learning something and see what updates would need to be made to transform the initial brain into the final one. So the idea of instantaneous learning is not physically impossible. But in practice, I expect that it would be extraordinarily hard to pull off. Perhaps you'd have to deploy nanobots to navigate the brain, identify just the right synapses, and adjust them in just the right ways, and do this for millions/billions/trillions of synapses. And each person's brain is somewhat different, so the required synapse updates might differ on a case-by-case basis. Maybe there are crude generalizations that could be done at a high level to accomplish some very basic synaptic updates, but this would be nothing like encoding the kind of neural detail required to teach students about world history or fine art. By far the easiest way to achieve the exact right kind of neural updates is to teach people the old, boring way.

In principle it might be slightly easier to implement instantaneous learning for uploaded human brains, since you wouldn't need nanobots in order to change synapses. But still, the required updates would be so detailed and specific to the individual that applying such updates still seems hopeless to me.

Here's an example of this point. Suppose Alice has a Windows computer that's running various programs, and she has a web page open that's computing a JavaScript animation. Bob has a Linux computer and would like to open the same web page and run the same animation to the same stage of the animation as Alice's computer is currently at. One way to do this is to dump all the data about the system configuration, installed programs, cache and RAM contents, etc. from Alice's computer, and try to figure out what updates to make to Bob's computer that will replicate the web page and the current stage of the animation. We would need to know what data to put in Bob's RAM, what program state to change on Bob's computer, etc. Alternatively, we can just have Bob run his browser, open the relevant web page using the in-built browsing software, and let the animation run to the desired stage. This latter method is comparable to learning information the old-fashioned way.

Everything is kind of a simulation

23 Aug. 2017

Like many concepts, "simulation" is a concept that comes in degrees without hard edges. Ordinarily when someone says "simulation" we might think of The Matrix, or at least the kinds of computations that a graduate student runs on a fast computer in order to model the evolution of a physical or social system. However, we can imagine gradually removing detail from these simulations until they become simpler and simpler. A very barebones simulation might model just a few variables evolving according to super simple update rules.

Even ordinary "thoughts" are crude simulations, in that the thinker reasons from certain premises ("initial conditions") to conclusions ("final states"). These computations help make predictions in a similar way as time-evolution simulations do. For example, you might find the minimum of the curve x^2 - 2x + 3 using calculus, without guessing and checking any x values, but you would still be making a prediction about the system in question by reasoning from input information to output conclusions. This kind of reasoning is functionally comparable to what we ordinarily consider simulation for the purposes of accomplishing your goals and so on.
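As a rough illustration of the curve example, here's a short Python sketch that finds the minimum both ways: once by the calculus shortcut (setting the derivative to zero) and once by brute-force guessing and checking. The function names, search range, and step count are arbitrary choices of mine.

```python
def f(x):
    """The curve from the example: x^2 - 2x + 3."""
    return x**2 - 2*x + 3


def minimum_by_calculus(a, b):
    """For a*x^2 + b*x + c with a > 0, the derivative 2*a*x + b is zero at x = -b / (2*a)."""
    return -b / (2 * a)


def minimum_by_guess_and_check(func, lo, hi, steps):
    """Evaluate func on an evenly spaced grid and keep the best x found."""
    best_x = lo
    for i in range(steps + 1):
        x = lo + (hi - lo) * i / steps
        if func(x) < func(best_x):
            best_x = x
    return best_x


x_calc = minimum_by_calculus(a=1, b=-2)
x_grid = minimum_by_guess_and_check(f, lo=-5, hi=5, steps=1000)
print("calculus:", x_calc, f(x_calc))
print("guess and check:", x_grid, f(x_grid))
```

Both routes take input information about the curve and output a prediction about where its minimum lies. The calculus route just gets there with far fewer steps.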

How about a grain of sand sinking to the bottom of a pond? What does that simulate? Among other things, it simulates itself, i.e., it simulates how a grain of sand sinks. :) It also simulates various equations of physics that are playing out in the situation. And given other interpretations of the physical system, this action may simulate other things as well.

Why I'm wary about multiple-choice questions

20 Aug. 2017

Who Wants to Be a Millionaire? and You Don't Know Jack are famous quiz games that use a multiple-choice format for asking questions. Many school tests and self-quizzes also have multiple choices because doing so makes answers particularly easy to grade.

While such quizzes can be fun, I'm nervous about them as a way to learn information because I worry that my brain might sometimes fail to distinguish the right answers from the wrong answers, causing me to "learn" all the answers to some degree. Most of one's time is spent thinking about the plausibility of each option, and quickly revealing the correct answer at the end seems unlikely to "undo" all the Hebbian work of associating the question with each answer that happened up to that point. I haven't looked into studies about this concern, so I don't know how seriously to take it.

I'm wary of highly realistic fiction for the same reason—it's often hard to distinguish what's real vs. what's a fictional embellishment. As a result, you either can't learn anything from the fictional work or risk learning some falsehoods. This was my big problem with the 2008 film Recount. This issue is also responsible for the CSI effect.

On not getting depressed by the world's suffering

16 Aug. 2017

In my moral outlook, sufficiently intense suffering, such as the worst forms of torture, is so serious that it makes other values comparatively trivial. For instance, the beauty of a sunset or the excitement of a new discovery can't compare in moral gravity to, e.g., being boiled alive. This outlook implies that the world is and always will be net negative in moral value.

I'm sometimes asked how I avoid feeling depressed by this. Well, I am sometimes very upset about the extreme suffering in the world, but for better or worse, these feelings die down over time due to emotional adaptation, and I revert back to a more "normal" mood. I think remembering the awfulness of suffering from time to time is important in order to keep one's moral compass calibrated, but on most normal days my attention and mood are focused on more mundane or intellectual topics (Tomasik 2017).

I'm lucky that I seem to have always had an attitude toward the world's problems that aligns well with the Serenity Prayer. I tend to take the way things are as a baseline and focus on what changes I can make relative to that baseline. This mental attitude came naturally to me, so I unfortunately don't have advice on how to cultivate it.

I would feel guilty if I didn't do much to reduce suffering, but because I do devote a decent fraction of my resources toward altruism, I feel satisfied with myself (even though I don't do nearly as much as a less flawed version of myself could).

Peak shift and hair length

5 Jul. 2017

Note: I'm not an expert on the topics discussed here. This post is fun speculation on ideas I don't have time to learn about in detail.

Why do women typically wear their hair longer than men? Fabry (2016) quotes Kurt Stenn as saying "[It is] almost universally culturally found that women have longer hair than men."

One possible explanation is that long hair signals good physical health, which is more important for the childbearing partner in a family. Fabry (2016):

Stenn, a former professor of pathology and dermatology at Yale who was also the director of skin biology at Johnson & Johnson, believes that health is perhaps the root cause of hair's significance. "In order to have long hair you have to be healthy," he says. "You have to eat well, have no diseases, no infectious organisms, you have to have good rest and exercise."

However, another possibility is that hair length is merely an arbitrary and overt way to exaggerate sexual dimorphism. My understanding of sexual dimorphism was enhanced recently by discovering the phenomenon of peak shift: "In the peak shift effect, animals sometimes respond more strongly to exaggerated versions of the training stimuli. For instance, a rat is trained to discriminate a square from a rectangle by being rewarded for recognizing the rectangle. The rat will respond more frequently to the object for which it is being rewarded to the point that a rat will respond to a rectangle that is longer and more narrow with a higher frequency than the original with which it was trained." Likewise, we might imagine that at some point in the past, longer hair was a weak signal of femininity, and once learned, women could deliberately grow their hair longer and longer to exaggerate their gender. (Or maybe men started cutting their hair shorter and shorter. Or both.)

Perry et al. (2013) give a cartoon sketch of how peak shift might work at a neural level in Figure 3(c). Applied to mate selection, the cartoon sketch might go as follows. A person takes in stimuli (including visual stimuli) about another person. These stimuli go through layers of neural-network processing and reach "extrinsic neurons", which "output to premotor areas and are important in organizing the behavioral response" (p. 571). For example, these extrinsic neurons might indicate whether to treat the other person as a potential mate or not. If both men and women look very similar, then similar inputs are fed into neural networks, so similar output extrinsic neurons fire, which makes it hard to decide which people are potential mates and which aren't. But some subset of extrinsic neurons will, due to statistical trends in the population, tend to fire more for members of the opposite sex than members of the same sex. So a new person whose traits are more exaggerated as opposite-sex traits will tend to fire more of the opposite-sex-specific extrinsic neurons, with fewer of the inhibitory same-sex extrinsic neurons, causing a stronger net response.

Another cartoon sketch of peak shift can be done if we imagine a "potential-mate classifier", which we could simplistically take to be a linear model. For example, suppose that men initially have this classifier:

potential-mate-ness score = 0.51 * trait1 - 0.18 * trait2 + ... + 0.05 * hair_length.

Because there's a positive (if small) 0.05 weight attached to hair length, women can slightly increase their scores as potential mates by growing hair longer (which is peak shift). Once this practice becomes commonplace, hair length becomes a better signal than it was before of femininity, so the new classifier gives it more weight, e.g.:

potential-mate-ness score = 0.47 * trait1 - 0.19 * trait2 + ... + 0.5 * hair_length.
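
As a rough illustration, the two classifiers above can be written as a small Python sketch. The weights are the ones shown in the formulas; the terms elided by "..." are simply left out, and the trait values (on an arbitrary 0-to-1 scale) are hypothetical numbers chosen only to show how the payoff from growing longer hair changes once the weight on hair length rises.

```python
def mate_score(weights, traits):
    """Linear score: the sum of weight * trait value over the listed traits."""
    return sum(weights[name] * traits[name] for name in weights)


# Only the terms written out in the text; the elided "..." terms are omitted.
initial_weights = {"trait1": 0.51, "trait2": -0.18, "hair_length": 0.05}
updated_weights = {"trait1": 0.47, "trait2": -0.19, "hair_length": 0.5}

# Hypothetical trait values: the same person with shorter vs. longer hair.
short_hair = {"trait1": 0.6, "trait2": 0.3, "hair_length": 0.2}
long_hair = {**short_hair, "hair_length": 0.9}

# Score gained by growing hair longer, under each classifier.
gain_initial = mate_score(initial_weights, long_hair) - mate_score(initial_weights, short_hair)
gain_updated = mate_score(updated_weights, long_hair) - mate_score(updated_weights, short_hair)

print(f"gain under initial classifier: {gain_initial:.3f}")
print(f"gain under updated classifier: {gain_updated:.3f}")
```

Under these made-up values, the same change in hair length is worth ten times as much under the second classifier, because the weight on hair length is ten times larger.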

Peak shift is a phenomenon of learning. It's possible that some parts of sexual attraction are genetically hard-coded, but presumably a good deal of sexual attraction is learned during sexual imprinting, and peak shift could apply there. For instance, a child can learn to distinguish its father from its mother, and then peak shift might favor exaggerated versions of the mother's traits (for a boy) or father's traits (for a girl).

Should goals always be open to revision?

29 Mar. 2017

Evan O'Leary asked me about the idea that a fundamental principle of ethics is that we should keep open our ability to improve our moral views, because our current views may be suboptimal (relative to some meta-level criterion, such as what we would endorse upon learning more).

I would say there are two conflicting imperatives, and we need to trade off between them. One imperative is to make updates to our values using update procedures that we approve of. The other imperative is to prevent corruption of our values in ways that we don't like. Leaving values open to change allows for both approved updates and unwanted corruption, and it's a messy empirical question what the balance of benefits vs. risks is for a given kind of brain update in a given situation.

As an analogy, one might say it's a fundamental principle of using a personal computer that we should keep open our ability to connect to the Internet. This is often true when we want to browse the web, download applications, etc. But being Internet-connected also allows for computer viruses and other malware. Sometimes not connecting a computer to the Internet is better for security reasons. Moreover, some people may prefer to avoid connecting to the Internet for a period of time to avoid distractions and temptations (which are analogous to unwanted moral goal drift).

When OCD is rational

16 Mar. 2017

In the late 1990s, I watched As Good as It Gets with my family. This was my first introduction to obsessive-compulsive disorder (OCD), and I noticed that I could weakly identify with a few of the main character's tendencies, such as wanting to step over sidewalk cracks. (In my case, that crack-skipping behavior may have been partly playful and partly due to a hard-to-verbalize desire for orderliness or something.)

Today, I no longer notice sidewalk cracks, although I do watch my footsteps in order to avoid painfully killing bugs under my feet. My checking for bugs can appear like mild OCD to onlookers, but I think this behavior is fairly rational given my belief that bug suffering is important and bad.

I'm somewhat OCD when checking important things, such as verifying that the stove is off before I go to bed, or checking that I've recovered all my belongings after going through airport security. I feel these behaviors are fairly rational, which is why I maintain them. I sometimes do rough Fermi estimates of the expected cost of failing to check carefully, and these estimates usually suggest that my checking behavior is worth its cost. If this isn't the case, I tend to stop obsessing so much. (That said, it's tough to get precise answers with Fermi calculations, and I may have a tendency to overestimate risks in order to be on the safe side.)
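
A minimal sketch of this kind of Fermi estimate, assuming the simplest possible model: checking is worthwhile when the probability of a failure times its cost exceeds the cost of the check. The function name and every number below are hypothetical placeholders, not figures from my own estimates.

```python
def checking_is_worth_it(p_failure, cost_of_failure, cost_of_checking):
    """Compare the expected loss from skipping a check against the cost of checking.

    All costs are in the same (arbitrary) units, e.g. dollars.
    Returns a (worth_it, expected_loss) pair.
    """
    expected_loss = p_failure * cost_of_failure
    return expected_loss > cost_of_checking, expected_loss


# Hypothetical inputs: a 1-in-1000 chance per occasion of a mistake costing
# 50,000 units, versus a check that costs 0.25 units of time and attention.
worth_it, expected_loss = checking_is_worth_it(
    p_failure=1 / 1000,
    cost_of_failure=50_000,
    cost_of_checking=0.25,
)
print(worth_it, expected_loss)
```

The inputs are only order-of-magnitude guesses, so the conclusion is only as reliable as those guesses.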

In my experience, OCD checking behaviors are a response to carelessness and automaticity. If I don't check something carefully, I lose track of it from time to time. For example, I sometimes unthinkingly put down my iPod in a weird location and then can't immediately find it. I've occasionally been similarly careless in more important contexts, such as when I forgot a key and got locked out of my building until I was able to get help. After this experience of getting locked out, I've become more "OCD" about checking that I have a key before I leave a locked building.

The OCD feeling involves a small degree of "anxiety", which I find is necessary in order to jolt my brain out of complacency and actually pay attention to rote tasks. It would be very easy to, say, glance briefly at my wallet and assume I saw my house key, even though I had actually forgotten it. Being OCD helps force me to devote my full attention to whether I actually have my house key.

OCD can also prompt you to go back and check something over again. I do this sometimes, such as when checking the stove before bed, if I can't tell whether I actually checked the stove tonight or am only remembering checks from prior nights. When you do a task routinely, different memories of it can blur together, which makes it hard to be sure whether you've actually done it on this occasion. Memory is unreliable.

Subjectively, when I check something routinely, like that I have my house key before leaving the house, I feel something like semantic satiation. I've already checked for my key a thousand times in the past, so when checking again today, it feels like I can't notice things to the same degree I would if this were my first time checking. I need a "jolt" out of this daze in order to pay attention. A similar phenomenon applies to checking for grammatical errors in an essay that you've already read through many times.

Finally, the OCD practice of counting can be a useful way to check that you have everything without needing to remember a whole list of what you need. For example, at the airport, I might remember that I need my backpack, my passport, and a few other items. Say it's five items total. Then you can just count your items (perhaps twice to be sure) rather than remembering each specific item you need. (One of the many reasons why I dislike travel is the stress required for me to pay attention so as to avoid losing things, missing my flight, etc.)

Note: By discussing my very mild cases of OCD-like behavior, I don't intend to downplay the suffering caused by less trivial instances of OCD.

Using novelty detection when researching

3 Mar. 2017

Recently I was trying to revise an article (call it "A") that I had written years ago by adding important information from a piece by another author (call it "B") that wasn't already in A. There are two ways to do this:

1. Read A first (to refresh my memory of what I had written), then read B and notice what information in B wasn't already in A.

2. Read B first, then read A, and try to remember what information in B wasn't also mentioned in A.

Cognitively, strategy #1 seems easier and more accurate. All you need to do in that case is build a mental "hash table" of the contents of A and then for each fact in B, check whether that fact is in the hash table. This strategy relies on the brain's "novelty detection" abilities to notice information from B that's "new" relative to what the brain remembers from reading A.

Strategy #2 is harder. You need to build a mental model of B, and then while reading A, you need to mentally "cross out" the facts from B that are already in A, and then go back through B and see what facts aren't crossed out. This requires storing a comprehensive representation of article B.

Here's an analogy. Suppose A and B aren't articles with facts but are lists of 50-digit random numbers.

- To do strategy 1, you could read through list A and just store, say, the first 5 digits of each number you see, which should most of the time be sufficient to uniquely check whether you already saw a given number. Then when reading through B, you check whether the first 5 digits of each number match something in your first-5-digits-from-A list, and if not, add that number from B to A.

- In contrast, to do strategy 2, you would have to store the full B list, and then as you're going through A, cross out the B items that are in A, and then add the non-crossed-out B items to A. Alternatively, you could store just the first 5 digits of the B list and cross out the first-5-digits-from-B items that are already in A. But then when going back to add the non-crossed-out items, you have to use the 5-digit abbreviations to look up the full 50-digit number in the original B list.
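
The number-list analogy can be sketched in Python roughly as follows. The function names, the `make_number` helper, and the example numbers are all hypothetical, and the lists are built by hand so that no two numbers share a 5-digit prefix (with truly random 50-digit numbers, a prefix collision is possible but rare).

```python
def strategy_1(list_a, list_b, prefix_len=5):
    """Read A first, keeping only short prefixes, then flag what's new in B."""
    seen_prefixes = {number[:prefix_len] for number in list_a}
    return [number for number in list_b if number[:prefix_len] not in seen_prefixes]


def strategy_2(list_a, list_b):
    """Read B first, storing it in full, then cross out items found while reading A."""
    not_crossed_out = dict.fromkeys(list_b)  # a dict keeps B's original order
    for number in list_a:
        not_crossed_out.pop(number, None)
    return list(not_crossed_out)


def make_number(prefix):
    """Hypothetical helper: pad a 5-digit prefix out to a 50-digit number."""
    return prefix + "7" * 45


list_a = [make_number(p) for p in ("12345", "67890", "24680")]
list_b = [make_number(p) for p in ("67890", "13579", "12345", "99999")]

new_1 = strategy_1(list_a, list_b)
new_2 = strategy_2(list_a, list_b)
print(new_1 == new_2, len(new_1))
```

Both strategies find the same new items here, but strategy 1 only has to hold a 5-digit prefix for each number in A, while strategy 2 has to hold every full 50-digit number from B.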

Tyranny of adults

14 Feb. 2017

From 2000 to 2005, I was a political junkie. For a time, I read the Albany Times Union newspaper every day after school, and I also followed a number of political blogs. Politics was a main subject of conversation among my friends. Yet, because I was only 17 in 2004, I wasn't able to vote in the 2004 US elections. Another political-junkie friend of mine commented on the unfairness of this.

I don't have a great solution to this problem, although plausibly the voting age should be lowered to 16 or 14. In principle, one could create a knowledge test to determine eligibility to vote, but this would be open to corruption and would probably disenfranchise many poor voters. The history of literacy tests for voting in the US is not pleasant. Still, perhaps voting eligibility could be disjunctive: either you're at least age 18, or you pass a knowledge test.

Beyond voting, there are many instances where adults may not actually be more qualified than children but have power over children anyway. Adults can be extremely immature in their own ways but unlike children don't have anyone (other than the government, employers, etc.) enforcing discipline. Adults mock the way children may want sugary foods and TV, but we could similarly mock adults for sometimes not having self-control in cases like sex and alcohol. (Of course, I'd rather not mock either group.) The difference is that adults get to indulge their cravings, while children are prevented from doing so (to varying degrees depending on the whims of their parents).

People sometimes express a desire to go back to childhood. I feel the opposite way. Being an adult is way more fun, since I don't have someone else dictating rules about how I have to live. As an adult, I can do basically anything I want whenever I want as long as I get my work done, whereas children are forced to follow strict and often exhausting daily schedules in school and then at home when completing homework.

Some children are lucky and have parents who don't impose too many rules. Other children are controlled by more stringent autocrats whose commands may be fairly arbitrary and hypocritical. (For example, "You have to go to bed, but I get to stay up late.") And of course, some of those autocrats use physical and emotional violence whenever they want to, without repercussion. Adults may also be ruled by the whims of their bosses, but adults can usually switch employers or report abuse to HR, while children have no similar recourse (except in cases of serious child abuse).

I'm not an expert on the youth-rights movement, but I probably support many of its proposals—at least pending empirical examination of what consequences those policies would actually have.

In an episode of the sitcom Dinosaurs, Earl (the father) reads the following statement: "Teenagers learn to make choices by having choices". I roughly agree with this, except in cases of potentially irreversible exploration, like trying an extremely addictive drug or injuring oneself by doing a stupid stunt.

There is legitimate science behind the idea that teen brains are still developing, and teenagers don't always have as much executive control over their actions. But, as with other forms of discrimination, these generalizations don't apply in every case. I think I was about as mature by age 13 as I am today at age 29 (in terms of good judgment and self-control, though not raw life experience and wisdom). Meanwhile, some 29-year-olds may not be mature enough to make their own choices. (And the example of the strikingly immature 70-year-old US President Donald Trump is well known.)

Why I personally don't like prize contests

22 Jan. 2017